
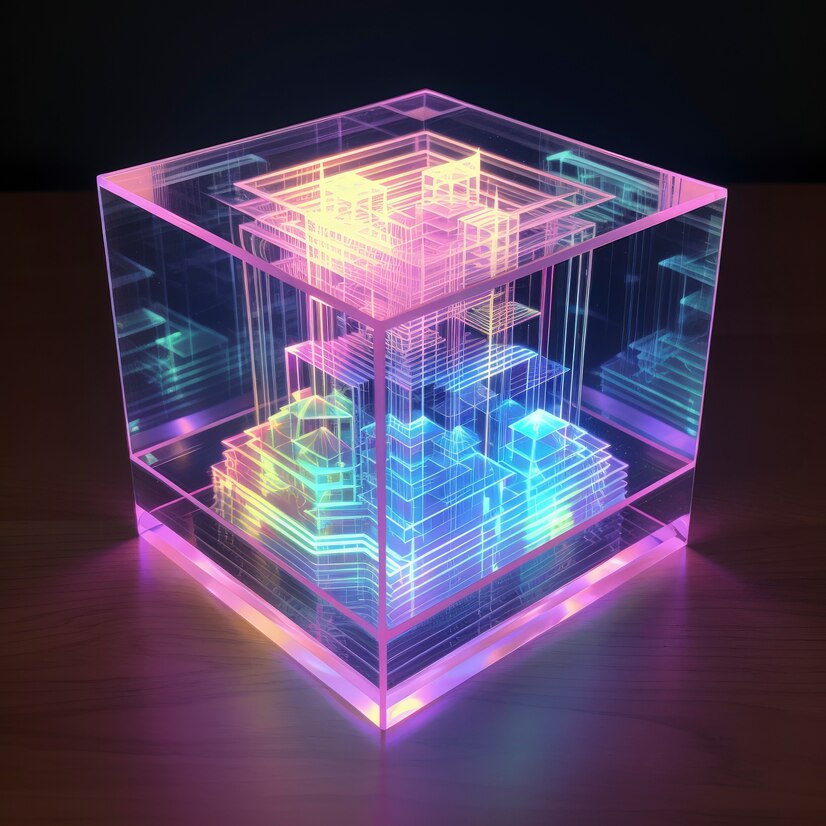

AI thrives on high-quality, well-structured data, but raw data is often messy, unorganized, and scattered across multiple sources. Transforming this data into actionable insights is crucial for AI success.

That’s where Databricks, Matillion, and Azure Data Factory come in—three powerful tools that, when combined, streamline data transformation and accelerate AI initiatives.

Without clean and structured data, even the most advanced AI models will struggle to deliver meaningful insights. These tools play a pivotal role in making data AI-ready:

Databricks: A unified analytics platform built on Apache Spark, ideal for data engineering, machine learning, and large-scale AI workloads.

Matillion: A cloud-native data integration platform that simplifies ETL/ELT processes, making data transformation seamless.

From Data Ingestion to Transformation to AI

From data ingestion to transformation to AI implementation, these tools work together effortlessly.

- Handle large datasets with ease, thanks to cloud-native architectures.

- Low-code interfaces in Matillion & Azure Data Factory simplify data transformation.

- Well-structured, clean data ensures higher accuracy and efficiency in AI models.

At TekLink, we specialize in helping businesses harness Databricks, Matillion, and Azure Data Factory to streamline data pipelines, enhance data quality, and build scalable AI solutions. Whether you’re just getting started or looking to optimize existing processes, our experts can guide you every step of the way.

- Ingest data from on-premises, cloud, or SaaS sources into Databricks or Matillion for transformation.

- Databricks transforms massive datasets at scale using Apache Spark, supporting high-performance AI workloads.

- Databricks integrates with AI frameworks like TensorFlow and PyTorch, allowing data scientists to build and deploy models effortlessly.

- Azure Data Factory automates end-to-end data pipelines, ensuring consistent and reliable data flow.
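To make the ingest-then-transform flow above more concrete, here is a toy sketch in plain Python. It is not Databricks, Matillion, or Azure Data Factory code: the `Customer` record, the two example sources, and the `ingest`/`clean`/`transform` helpers are all hypothetical, chosen only to illustrate the kind of steps (combining sources, normalizing fields, dropping incomplete and duplicate rows) that a transformation job performs before data is handed to an AI model.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


# Hypothetical target schema for "AI-ready" customer records.
@dataclass(frozen=True)
class Customer:
    customer_id: str
    email: str
    country: str


def ingest(*sources: Iterable[dict]) -> list[dict]:
    """Combine raw rows from several sources (e.g. an on-prem export and a SaaS API)."""
    rows: list[dict] = []
    for source in sources:
        rows.extend(source)
    return rows


def clean(row: dict) -> Optional[Customer]:
    """Normalize one raw row; return None if a required field is missing."""
    cid = str(row.get("id") or "").strip()
    email = str(row.get("email") or "").strip().lower()
    country = str(row.get("country") or "").strip().upper()
    if not cid or not email:
        return None  # drop incomplete records
    return Customer(cid, email, country or "UNKNOWN")


def transform(rows: Iterable[dict]) -> list[Customer]:
    """Clean every row, drop invalid ones, and de-duplicate on customer_id (first wins)."""
    seen: set[str] = set()
    result: list[Customer] = []
    for row in rows:
        customer = clean(row)
        if customer is None or customer.customer_id in seen:
            continue
        seen.add(customer.customer_id)
        result.append(customer)
    return result


# Hypothetical messy inputs from two different systems.
crm_export = [
    {"id": "1", "email": " Ada@Example.com ", "country": "us"},
    {"id": "2", "email": None, "country": "de"},
]
saas_api = [
    {"id": "1", "email": "ada@example.com", "country": "US"},
    {"id": "3", "email": "Grace@Example.com", "country": ""},
]

customers = transform(ingest(crm_export, saas_api))
for c in customers:
    print(c)
```

On Databricks, the same logic would typically be written as Spark DataFrame operations (for example PySpark's `dropna` and `dropDuplicates`) so that it runs distributed across a cluster rather than in a single Python loop.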

We’re showcasing interactive demos and real-world use cases where Databricks and Matillion work together to streamline ETL pipelines, unify data silos, and enable faster analytics at scale.